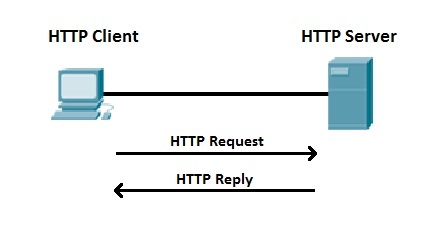

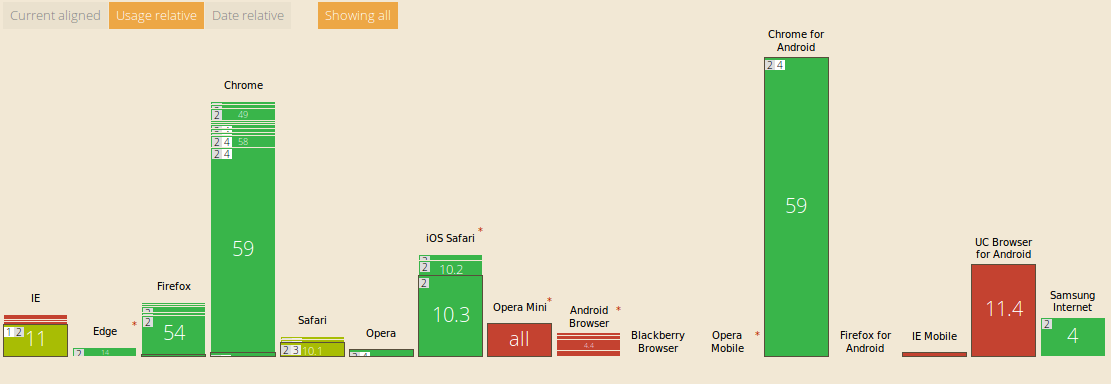

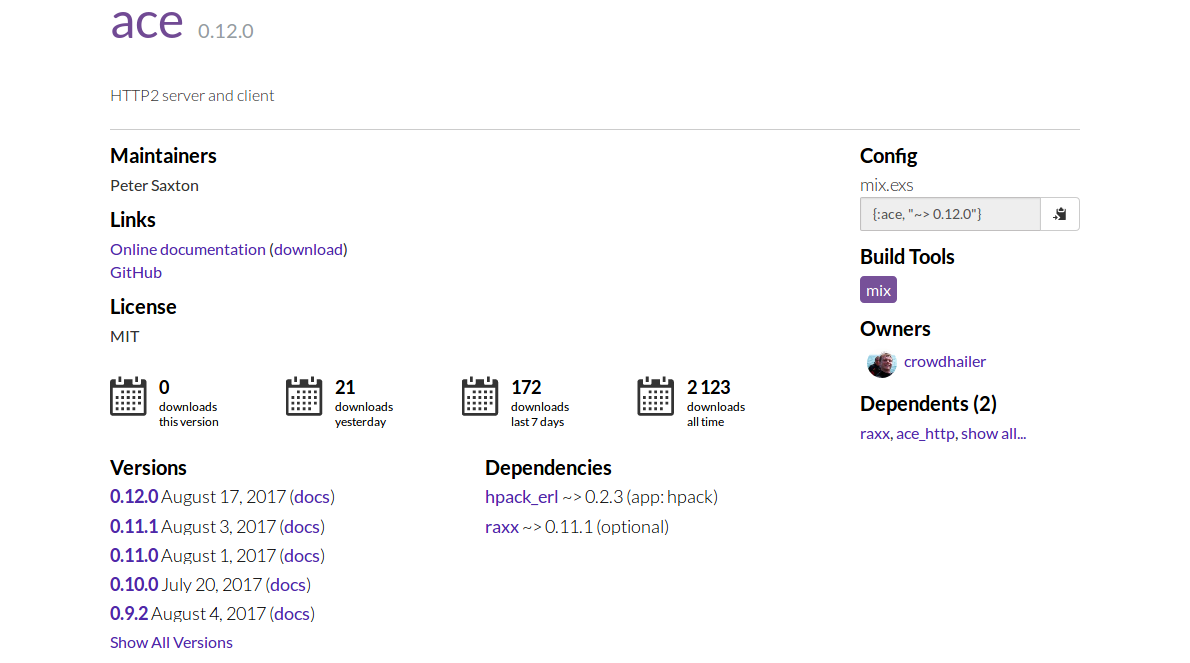

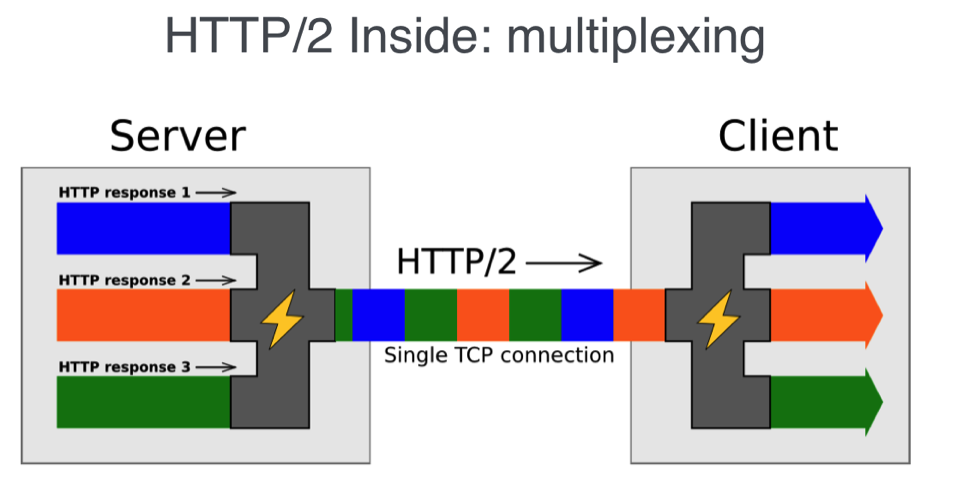

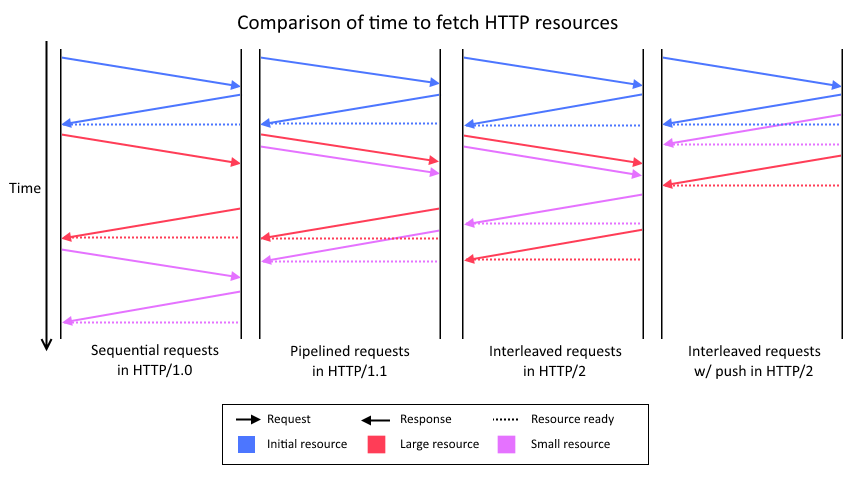

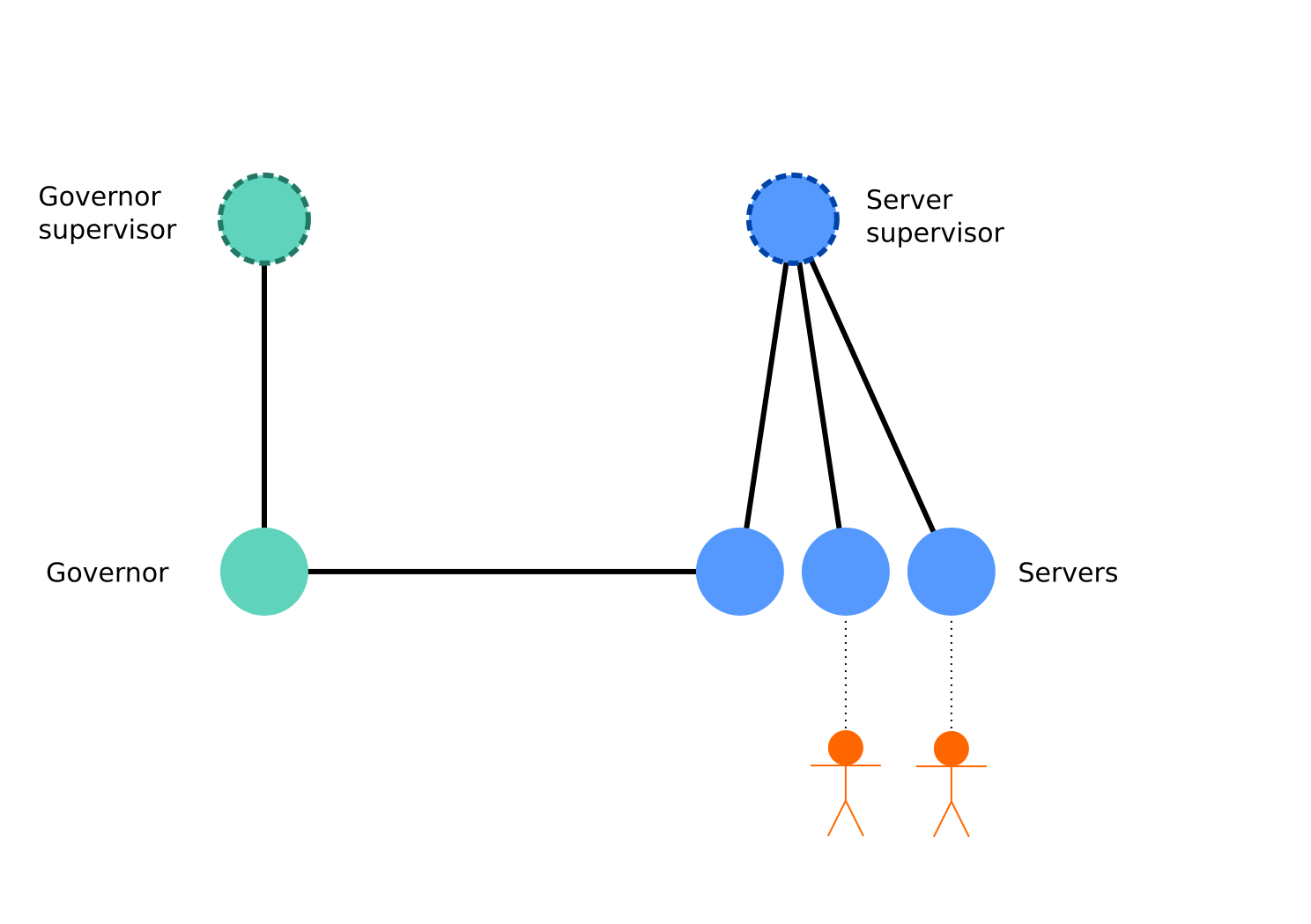

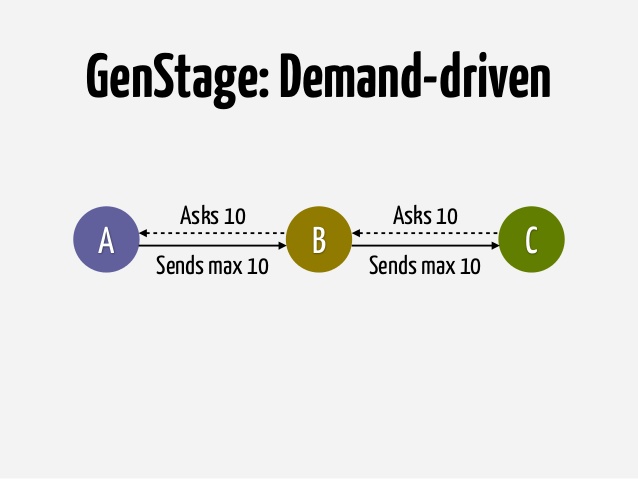

class: middle

#.main[HTTP/2 in Elixir]

- source https://github.com/CrowdHailer/Ace
- slides http://crowdhailer.me

???

Any of the code samples I show here can be seen in context at this GitHub repository.

---

class: middle, center

> This specification describes an optimized expression of the semantics
> of the Hypertext Transfer Protocol (HTTP), referred to as HTTP
> version 2 (HTTP/2)

## 2015 May 14

???

On Tuesday this was my only slide. So if at any point it looks like these slides are new to me, it's because they are.

---

class: middle

## **Semantics**

> the meaning of a word, phrase, or text

---

class: middle, center

---

class: middle

## Goals

- Same request response model
- No new methods
- No new headers (although some deprecated)
- URLs have the same meaning
- No change in use for the application (probably not true.)

---

class: middle

## **Optimized**

- binary protocol
- header compression
- multiplexing streams
- flow control
- server push
- stream priority

---

class: middle, center

## Comparison

<iframe width="560" height="315" src="https://www.youtube.com/embed/gqUCeGkTYjY" frameborder="0" allowfullscreen></iframe>

---

class: middle

## Today's **survey**:

- No value in HTTP/2?
- Would like to use HTTP/2?
- Currently using HTTP/2?

---

class: middle

## Can I use it **Today**?

---

class: middle, center

# Browser

---

class: middle

## Server side

- Cowboy
- Lucid
- Chatterbox

---

class: middle

## Cowboy **2.0**

- 2.0.0-pre.1 released **2014 Oct 4**
- ...
- ...
- ...
- 2.0.0-rc.1 released **2017 July 24**

???

A number of features, such as trailers, are missing until 2.1. In fairness, there are good reasons why it has been slow when considering backwards compatibility.

---

class: middle

## **Ace**

### HTTP/2 client and server for Elixir

- Focused on making efficient streaming easy
- Implemented without support for HTTP/1.x *(now in the works)*

???

The lack of support for interop with HTTP/1.x has made things much easier.
It is still useful in a variety of locations, such as gRPC, which we come back to later.

---

class: middle, center

---

class: middle

## Request/Response

```elixir
Ace.Request.get("/", [{"accept", "text/plain"}])
Ace.Request.post("/", [{"content-type", "text/plain"}], "Hello")
```

```elixir
Ace.Response.new(200, [{"content-type", "text/plain"}], "Hello, World!")
Ace.Response.new(304, [], false)
```

---

class: middle

## Streaming?

*"Focused on making efficient streaming easy."*

---

class: middle

## Request/Response streaming

```elixir
Ace.Request.get("/", [{"accept", "text/plain"}])
Ace.Request.post("/", [{"content-type", "text/plain"}], true)
```

```elixir
Ace.Response.new(200, [{"content-type", "text/plain"}], true)
Ace.Response.new(304, [], false)
```

---

class: middle

## Client side

```elixir
alias Ace.HTTP2.Client

{:ok, client} = Client.start_link("example.com")
{:ok, stream} = Client.stream(client)
:ok = Client.send_request(stream, request)
:ok = Client.send_data(stream, "Hello, ")
:ok = Client.send_data(stream, "World!", true)
```

*Helpers such as `Client.send_sync/2` exist for when streaming is not important*

???
stream evidence of underlying changes

---

class: middle

## Server side

```elixir
defmodule MyProject.WWW do
  use GenServer
  alias Ace.HTTP2.Server

  def start_link(greeting) do
    GenServer.start_link(__MODULE__, greeting)
  end

  # Each request arrives as a message tagged with the stream it belongs to.
  def handle_info({stream, %Ace.Request{method: :GET, path: "/"}}, greeting) do
    response = Ace.Response.new(200, [], greeting)
    Server.send_response(stream, response)
    {:noreply, greeting}
  end

  # Any other request gets a 404 with no body.
  def handle_info({stream, _request}, state) do
    response = Ace.Response.new(404, [], false)
    Server.send_response(stream, response)
    {:noreply, state}
  end
end
```

*Helpers that allow applications to work at a higher level are available.*

---

class: middle

## Running Servers

```elixir
application = {MyProject.WWW, ["Hello, World!"]}
options = [
  port: 8443,
  certfile: "path/to/certfile",
  keyfile: "path/to/keyfile"
]
{:ok, pid} = Ace.HTTP2.Service.start_link(application, options)
```

*Starts the service manually. In production, add it to a supervision tree.*

---

class: middle, center

---

class: middle

# Implementation

How are these requests and responses delivered faster with HTTP/2 and Elixir?
---

class: middle

- **binary protocol**
- header compression
- multiplexing streams
- flow control
- server push
- stream priority

---

class: middle

## **Binary** protocol

- ✔ easier to parse, ("\r\n" "\r" "\n")
- ✔ more compact
- ✔ less error-prone, (no request splitting attack)
- ✘ harder to debug

---

class: middle

## Binaries in **Elixir**

```elixir
iex(1)> "Hello " <> town = "Hello London"
"Hello London"
iex(2)> town
"London"
```

---

class: middle

## Binaries in **Elixir**

```elixir
iex(1)> <<value::16>> = <<1, 1>>
<<1, 1>>
iex(2)> value
257
```

---

class: middle

## Binaries in **Elixir**

```elixir
iex(1)> <<l::8, part::binary-size(l), rest::binary>> = <<2, "Elixir">>
<<2, 69, 108, 105, 120, 105, 114>>
iex(2)> l
2
iex(3)> part
"El"
iex(4)> rest
"ixir"
```

---

class: middle

## Binaries in **Elixir**

```elixir
iex(5)> <<l::8, part::binary-size(l), rest::binary>> = <<8, "Elixir">>
** (MatchError) no match of right hand side value: "\bElixir"
```

---

class: middle

## HTTP/2 **Frames**

> The basic protocol unit in HTTP/2 is a frame (Section 4.1). Each frame type serves a different purpose.

---

class: middle

## **Frame** Format

```txt
+-----------------------------------------------+
|                 Length (24)                   |
+---------------+---------------+---------------+
|   Type (8)    |   Flags (8)   |
+-+-------------+---------------+-------------------------------+
|R|                 Stream Identifier (31)                      |
+=+=============================================================+
|                   Frame Payload (0...)                      ...
+---------------------------------------------------------------+
```

---

class: middle

## **Frame** parsing

```elixir
def parse_from_buffer(
      <<
        length::24,
        type::8,
        flags::bits-size(8),
        # Reserved bit, ignored when parsing
        _reserved::1,
        stream_id::31,
        payload::binary-size(length),
        rest::binary
      >>,
      max_length: max_length
    )
    when length <= max_length do
  {:ok, {{type, flags, stream_id, payload}, rest}}
end
```

*Deconstruct HTTP/2 frames from a binary stream*

---

class: middle

## **Frame** parsing

```elixir
# The declared length exceeds what this endpoint accepts.
def parse_from_buffer(<<length::24, _::binary>>, max_length: max_length)
    when length > max_length do
  {:error, :frame_size_error}
end

# Not enough bytes for a complete frame yet; keep buffering.
def parse_from_buffer(buffer, max_length: _) when is_binary(buffer) do
  {:ok, {nil, buffer}}
end
```

*Handle cases when no valid frame is available*

---

class: middle

- binary protocol
- header compression
- **multiplexing streams**
- flow control
- server push
- stream priority

---

class: middle

## **Multiplexed** Streams

> Streams are largely independent of each other, so a blocked or stalled request or response does not prevent progress on other streams.

---

class: middle, center

---

class: middle

## **Multiplexed** Streams

<!--
- ✔ less error-prone
- ✘ harder to debug
-->

- ✔ Avoids the round trips needed to establish a new TCP connection
- ✔ 'solves' head of line blocking in HTTP/1.1 pipelining

*Head of line blocking is still present in TCP.*

---

class: middle, center

???

http://blog.scottlogic.com/wreilly/assets/http2/http-timing-diagram.png

---

class: middle

## Connection **Isolation**

http://joearms.github.io/2016/03/13/Managing-two-million-webservers.html

---

class: middle, center

---

class: middle

## Stream **Isolation**

Every stream in **Ace** has a dedicated server.
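
A minimal sketch of what per-stream isolation buys you, not Ace's actual code: the `IsolationSketch.Worker` module and the stream ids are hypothetical, and a plain `DynamicSupervisor` stands in for whatever the connection really uses. One worker is killed and the workers for the other streams keep answering.

```elixir
defmodule IsolationSketch.Worker do
  # Hypothetical per-stream worker used for illustration; not part of Ace.
  use GenServer

  def start_link(stream_id), do: GenServer.start_link(__MODULE__, stream_id)

  @impl true
  def init(stream_id), do: {:ok, stream_id}

  @impl true
  def handle_call(:stream_id, _from, stream_id), do: {:reply, stream_id, stream_id}
end

{:ok, supervisor} = DynamicSupervisor.start_link(strategy: :one_for_one)

# Start one dedicated worker per (client-initiated, odd-numbered) stream id.
workers =
  for stream_id <- [1, 3, 5], into: %{} do
    spec = %{
      id: IsolationSketch.Worker,
      start: {IsolationSketch.Worker, :start_link, [stream_id]},
      # A crashed stream is simply gone; it is never restarted.
      restart: :temporary
    }

    {:ok, pid} = DynamicSupervisor.start_child(supervisor, spec)
    {stream_id, pid}
  end

# Kill the worker for stream 3 and wait until it is really down.
ref = Process.monitor(workers[3])
Process.exit(workers[3], :kill)

receive do
  {:DOWN, ^ref, :process, _pid, :killed} -> :ok
end

# The workers for the other streams are unaffected.
1 = GenServer.call(workers[1], :stream_id)
5 = GenServer.call(workers[5], :stream_id)
```

*A failure in one stream's worker stays inside that stream.*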
---

class: middle

## Client

```elixir
{:ok, client} = Client.start_link("http2.golang.org")
{:ok, stream} = Client.stream(client)
```

*Isolating streams is a client's responsibility*

---

class: middle

## Server

```elixir
def init({listen_socket, {mod, args}, settings}) do
  {:ok, stream_supervisor} =
    Supervisor.start_link(
      [Supervisor.Spec.worker(mod, args, restart: :transient)],
      strategy: :simple_one_for_one
    )

  # ... rest of setup
end
```

*Run when starting a connection*

---

class: middle

## Server

```elixir
def open_stream(connection, stream_id) do
  initial_window = connection.remote_settings.initial_window_size
  supervisor = connection.stream_supervisor
  {:ok, worker} = Supervisor.start_child(supervisor, [])
  stream = Stream.idle(stream_id, worker, initial_window)
  {:ok, put_stream(connection, stream)}
end
```

*Run for every new stream*

---

class: middle

## **Streaming**

- ✔ Data can be sent as soon as it is available
- ✔ Large requests or responses do not need to be buffered to memory
- ✘ Not so simple to work with, response != func(request)
- ✘ Many intermediate states

---

class: middle

## **Streaming**

```txt
receive REQUEST
receive DATA
send RESPONSE
receive DATA
send PROMISE
send DATA
receive TRAILER
send DATA
send END_STREAM
```

???

- The worker might send any or none of the following before the request has finished sending.
- The worker or client may choose to terminate the stream early.
- The worker may terminate unexpectedly.

---

```txt
                         +--------+
                 send PP |        | recv PP
                ,--------|  idle  |--------.
               /         |        |         \
              v          +--------+          v
       +----------+          |           +----------+
       |          |          | send H /  |          |
,------| reserved |          | recv H    | reserved |------.
|      | (local)  |          |           | (remote) |      |
|      +----------+          v           +----------+      |
|          |             +--------+             |          |
|          |     recv ES |        | send ES     |          |
|   send H |     ,-------|  open  |-------.     | recv H   |
|          |    /        |        |        \    |          |
|          v   v         +--------+         v   v          |
|      +----------+          |           +----------+      |
|      |   half   |          |           |   half   |      |
|      |  closed  |          | send R /  |  closed  |      |
|      | (remote) |          | recv R    | (local)  |      |
|      +----------+          |           +----------+      |
|           |                |                 |           |
|           | send ES /      |       recv ES / |           |
|           | send R /       v        send R / |           |
|           | recv R     +--------+   recv R   |           |
| send R /  `----------->|        |<-----------'  send R / |
| recv R                 | closed |               recv R   |
`----------------------->|        |<----------------------'
                         +--------+
```

---

class: middle

## **Streaming**

- Stream state lives in the connection process, controlled by Ace.
- Stream worker is application controlled, completely unknown.

---

class: middle

## Starting a **state machine**

```elixir
def idle(stream_id, worker, initial_window_size) do
  new(stream_id, {:idle, :idle}, worker, initial_window_size)
end

def reserve(stream_id, worker, initial_window_size) do
  new(stream_id, {:reserved, :closed}, worker, initial_window_size)
end

def reserved(stream_id, worker, initial_window_size) do
  new(stream_id, {:closed, :reserved}, worker, initial_window_size)
end
```

---

class: middle

## Starting a **state machine**

```elixir
defp new(stream_id, status, worker, initial_window_size) do
  # Monitor the worker so the connection learns if it terminates.
  monitor = Process.monitor(worker)

  %__MODULE__{
    id: stream_id,
    status: status,
    worker: worker,
    monitor: monitor,
    initial_window_size: initial_window_size,
    incremented: 0,
    sent: 0,
    queue: []
  }
end
```

---

class: middle

## Inbound **messages**

```elixir
def receive_headers(stream, headers, end_stream) do
  # status is a {local, remote} pair
  case stream.status do
    {:idle, :idle} ->
      forward(stream, headers_to_request(headers, end_stream))
      {:ok, {:idle, :open}}

    {local, :idle} ->
      forward(stream, headers_to_response(headers, end_stream))
      {:ok, {local, :open}}

    {local, :reserved} ->
      forward(stream, headers_to_response(headers, end_stream))

    {local, :open} ->
      forward(stream, headers_to_trailers(headers))
      {:ok, {local, :closed}}

    {_local, :closed} ->
      {:error, {:stream_closed, "Headers received on closed stream"}}
  end

  # ... then update the stream state
end
```

???
You can see this works for both client and server states.

---

class: middle

## Outbound **messages**

```elixir
def send_data(stream, data, end_stream) do
  case stream.status do
    {:open, _remote} ->
      # Data is queued, not written straight to the socket.
      queue = [%{data: data, end_stream: end_stream}]
      new_stream = %{stream | queue: stream.queue ++ queue}

      final_stream =
        if end_stream do
          process_send_end_stream(new_stream)
        else
          new_stream
        end

      {:ok, final_stream}

    {_, _} ->
      {:error, :protocol_error}
  end
end
```

---

class: middle

- binary protocol
- header compression
- multiplexing streams
- **flow control**
- server push
- stream priority

---

class: middle

## Flow **control**

> Flow control and prioritization ensure that it is possible to efficiently use multiplexed streams. Flow control (Section 5.2) helps to ensure that only data that can be used by a receiver is transmitted.

---

class: middle

## Flow **control**

```elixir
def send_data(stream, data, end_stream) do
  case stream.status do
    {:open, _remote} ->
      # Data is queued, not written straight to the socket.
      queue = [%{data: data, end_stream: end_stream}]
      new_stream = %{stream | queue: stream.queue ++ queue}

      final_stream =
        if end_stream do
          process_send_end_stream(new_stream)
        else
          new_stream
        end

      {:ok, final_stream}

    {_, _} ->
      {:error, :protocol_error}
  end
end
```

---

class: middle

## Flow **control**

```elixir
def send_available(connection) do
  # Collect frames from every stream's queue.
  Enum.reduce(connection.streams, {[], connection}, fn
    {_id, stream}, {out_tray, connection} ->
      {frames, connection} = pop_stream(stream, connection)
      {out_tray ++ frames, connection}
  end)
end
```

---

class: middle

## Flow **control**

```elixir
def consume_frame(frame = %Frame.WindowUpdate{stream_id: 0}, state) do
  new_window = state.outbound_window + frame.increment

  # 2^31 - 1
  if new_window <= 2_147_483_647 do
    new_state = %{state | outbound_window: new_window}
    {frames, newer_state} = send_available(new_state)
    {:ok, {frames, newer_state}}
  else
    {:error, {:flow_control_error, "Total window size exceeded max allowed"}}
  end
end
```

---

class: middle

- binary protocol
- **header compression**
- multiplexing streams
- flow control
- **server push**
- **stream priority**

---

class: middle

# **Lessons** Learned

---

class: middle

## Write **library** applications

```elixir
setup do
  opts = [
    port: 8444
    # ... etc
  ]

  assert {:ok, service} = Service.start_link({ForwardTo, [self()]}, opts)
  assert_receive {:listening, ^service, port}
  {:ok, %{port: port}}
end
```

*Reusable test setup*

---

class: middle

## Don't use **config.exs**

???

This might be a slightly controversial point. If there is no global state, there is not much to configure.

---

class: middle

## Limit your API

```elixir
defmodule MyProject.Internal do
  @moduledoc false
end
```

???

I have an HPack wrapper so I can switch out which version I use. This is not part of the public API.

---

class: middle

# Next steps for **Ace**

---

class: middle

## **Raxx** adapter

- webserver interface focusing on simplicity
- request -> response

##### *In progress*

???

There is an upgrade in progress on this to include streaming and maintain purity.

---

class: middle

## **Plug** adapter

- expose server push
- expose flow control
- patch send_file

##### *Help wanted*

???

Work by gazler and co on this later on

---

class: middle

## **Clients**

- pigeon for Apple push notifications

---

class: middle, center

---

class: middle

## **GRPC**

- Only HTTP/2 ✔
- Client and Server required ✔

##### *Help wanted*

???

I think this is a particularly interesting use case for Ace.
There is no need for the cost associated with being able to fall back to HTTP/1.x.

---

class: middle

## **GenStage**

- Distributed stream processing
- HTTP/2 backpressure

##### *Help wanted*

---

class: middle, center

---

class: middle

## **Docs**

##### *Beginner*

## **Dialyzer**

##### *Help wanted*

## **Erlang interop**

##### *Help wanted*

---

class: middle

# **Thank you**

## *name -* Peter Saxton

## *@internets -* CrowdHailer

### Lead Developer at [paywithcurl.com](https://paywithcurl.com/)

#### *Using Elixir every day*

---

class: middle

## See the code

- **[github.com/crowdhailer/ace](https://github.com/crowdhailer/ace)**<br>
  Elixir servers for TCP and TLS(ssl) endpoints.

*Also*

- **[hexdocs.pm/raxx](https://hexdocs.pm/raxx)**<br>
  A pure webserver interface for Elixir.
- **[hexdocs.pm/tokumei](https://hexdocs.pm/tokumei)**<br>
  Tiny yet MIGHTY Elixir webframework.